What is Log File Analysis?

Log file analysis involves reviewing the log files kept by a website’s servers, which record every request made to the site. It is an essential part of technical SEO because it shows how Googlebot and other web crawlers actually interact with a website. By examining log files, you can identify problematic pages, see how your crawl budget is being spent, and gather other critical information related to technical SEO.

To better understand log file analysis, it’s important to first know what log files are. These records are created by servers and contain data about each request made to the site, including the IP address of the requesting client, the type of request (HTTP method), the user agent, a timestamp, the requested resource URL path, and the HTTP status code returned.
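
As an illustration of the fields listed above, here is a minimal Python sketch that splits one log line into named fields. It assumes the "combined" log format that many Apache and Nginx servers use by default; your server may log in a different layout, so treat the pattern as a starting point. The sample line, `LOG_PATTERN`, and `parse_line` are made up for this example.

```python
import re
from typing import Optional

# Pattern for the "combined" log format (an assumption; adjust it to match
# your server's actual log configuration).
LOG_PATTERN = re.compile(
    r'(?P<ip>\S+) \S+ \S+ \[(?P<timestamp>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<path>\S+) (?P<protocol>[^"]*)" '
    r'(?P<status>\d{3}) (?P<size>\S+) '
    r'"(?P<referrer>[^"]*)" "(?P<user_agent>[^"]*)"'
)

def parse_line(line: str) -> Optional[dict]:
    """Return the fields of one log line, or None if it does not match."""
    match = LOG_PATTERN.match(line)
    if match is None:
        return None
    record = match.groupdict()
    record["status"] = int(record["status"])
    return record

# Made-up sample line, for illustration only.
sample = (
    '66.249.66.1 - - [10/Oct/2023:13:55:36 +0000] '
    '"GET /blog/post-1 HTTP/1.1" 200 5123 "-" '
    '"Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"'
)
record = parse_line(sample)
print(record["ip"], record["method"], record["path"], record["status"])
```

Lines that do not fit the pattern return `None` instead of raising an error, which makes it easy to count and inspect unparsed lines separately.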

Why is Log File Analysis Important?

Log file analysis is critical in technical SEO because it provides valuable insights into how Google and its crawlers interact with your website. By examining log files, you can track:

- Crawl Frequency: How often Google crawls your website.

- Page Crawl Rates: Which pages are most commonly crawled and which ones aren’t.

- Crawl Budget: Whether there are problematic and irrelevant pages that waste search engine resources.

- HTTP Status Codes: The specific HTTP status codes for each page on your website.

- Crawler Activity: Sudden changes, like significant increases or drops, in crawler activity.

- Orphan URLs: Unintentional orphan URLs, which are pages with no incoming internal links. Crawlers struggle to discover these pages through normal site navigation, so they are often crawled rarely or not at all.
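
To make the first two items concrete, the sketch below counts crawler hits per day and per URL. It assumes the log lines have already been parsed and filtered to a single crawler; the `requests` list is invented sample data, and the timestamp layout is the one used by the common and combined log formats.

```python
from collections import Counter
from datetime import datetime

# Hypothetical, already-parsed crawler requests: (timestamp, URL path).
requests = [
    ("10/Oct/2023:13:55:36 +0000", "/"),
    ("10/Oct/2023:14:02:11 +0000", "/blog/post-1"),
    ("11/Oct/2023:09:15:02 +0000", "/"),
    ("11/Oct/2023:09:16:40 +0000", "/tag/old?page=7"),
    ("11/Oct/2023:18:30:00 +0000", "/"),
]

def parse_timestamp(value: str) -> datetime:
    # Timestamp layout of the common/combined log formats (an assumption).
    return datetime.strptime(value, "%d/%b/%Y:%H:%M:%S %z")

# Crawl frequency: number of crawler requests per calendar day.
hits_per_day = Counter(parse_timestamp(ts).date().isoformat() for ts, _ in requests)

# Page crawl rates: number of crawler requests per URL path.
hits_per_path = Counter(path for _, path in requests)

print(dict(hits_per_day))
print(hits_per_path.most_common(3))
```

A sudden change in the per-day counts is a signal of a shift in crawler activity, while URLs at the bottom of the per-path counts are candidates for pages that are rarely crawled.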

How to Do a Log File Analysis?

1. Access the Log Files

Log files are kept on the server, so you will need access to download a copy. A common way of accessing the server is with an FTP client, such as FileZilla, which is free and open source, but you can also use the file manager in the server’s control panel.

Issues to Consider:

- Partial Data: Log files might contain partial data scattered across several servers.

- Privacy Compliance: Log files contain users’ IP addresses, which many privacy regulations treat as personal data.

- Limited Storage: Log files might only store data for a few days.

- Unsupported Formats: Files tend to be formatted in ways that need parsing before analysis.
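
For the privacy point above, one common mitigation is to truncate IP addresses before storing or sharing the logs. The sketch below uses Python's standard `ipaddress` module to zero the host part of each address. The prefix lengths (/24 for IPv4, /48 for IPv6) and the function name `anonymize_ip` are assumptions chosen for illustration; whether truncation is enough for your compliance needs is a question for your legal or privacy team.

```python
import ipaddress

def anonymize_ip(ip: str) -> str:
    """Zero the host part of an address: keep /24 for IPv4, /48 for IPv6."""
    address = ipaddress.ip_address(ip)
    prefix = 24 if address.version == 4 else 48
    # strict=False lets us pass a host address and get its enclosing network.
    network = ipaddress.ip_network(f"{ip}/{prefix}", strict=False)
    return str(network.network_address)

# Addresses from documentation-reserved ranges, used here as sample input.
print(anonymize_ip("203.0.113.57"))
print(anonymize_ip("2001:db8:abcd:12:34:56:78:9a"))
```

Keep in mind that truncated addresses can no longer be used to verify a crawler's identity, so run any verification before anonymizing.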

2. Export and Parse Log Files

Once connected to the server, retrieve the log files for the period you want to analyze. You will most likely be interested in the entries generated by search engine bots, so expect to filter the raw logs down to those requests. You may also have to parse the log data and convert it into a format your analysis tool accepts before proceeding to the next step.
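
A simple way to narrow the logs to search engine bots is to match on the user agent string. The following sketch does this with plain substring checks. The token list, function names, and sample lines are illustrative assumptions, and because user agents can be spoofed, matching on them only tells you what a client claims to be. Google documents a reverse DNS lookup for confirming genuine Googlebot traffic.

```python
# User-agent tokens to look for; this short list is illustrative, not complete.
BOT_TOKENS = ("Googlebot", "bingbot", "DuckDuckBot", "YandexBot")

def bot_name(text: str):
    """Return the first bot token found in the text, or None."""
    lowered = text.lower()
    for token in BOT_TOKENS:
        if token.lower() in lowered:
            return token
    return None

def filter_bot_lines(lines):
    """Yield (bot, line) pairs for lines that mention a known bot token.

    The whole line is searched for simplicity; searching only the parsed
    user agent field would avoid false matches in URLs or referrers.
    """
    for line in lines:
        name = bot_name(line)
        if name is not None:
            yield name, line.rstrip("\n")

# Made-up sample lines, for illustration only.
lines = [
    '66.249.66.1 - - [10/Oct/2023:13:55:36 +0000] "GET / HTTP/1.1" 200 512 "-" '
    '"Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"\n',
    '198.51.100.7 - - [10/Oct/2023:13:56:01 +0000] "GET /pricing HTTP/1.1" 200 2048 "-" '
    '"Mozilla/5.0 (Windows NT 10.0; Win64; x64)"\n',
    '157.55.39.1 - - [10/Oct/2023:13:57:12 +0000] "GET /blog HTTP/1.1" 200 1024 "-" '
    '"Mozilla/5.0 (compatible; bingbot/2.0; +http://www.bing.com/bingbot.htm)"\n',
]
bot_lines = list(filter_bot_lines(lines))
for name, line in bot_lines:
    print(name, line[:40])
```

In practice you would pass an open file object instead of the `lines` list, so the log is streamed line by line rather than loaded into memory at once.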

3. Analyze the Log Files

You could simply import the data into Google Sheets, but log files grow quickly and can soon exceed what a spreadsheet handles comfortably. The more efficient option is to use specialized software designed to handle large datasets.

Recommended Tools:

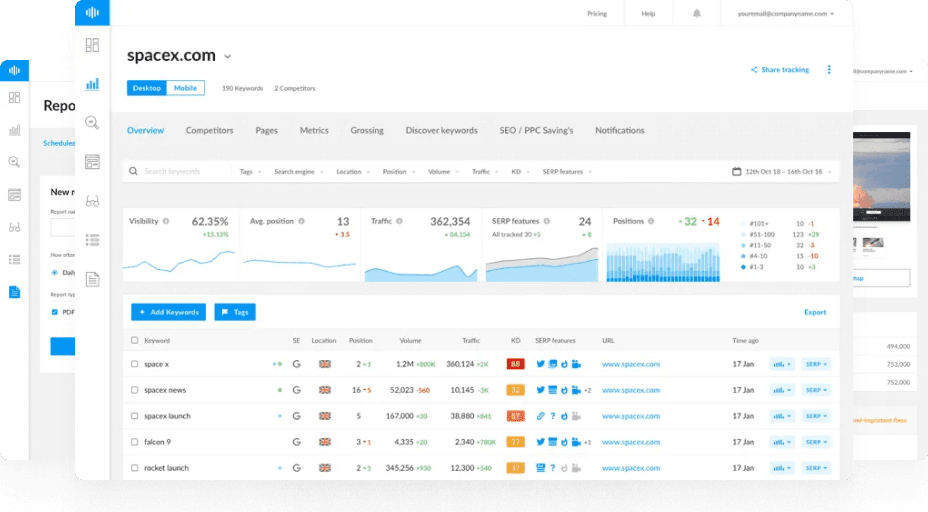

You can also use Ranktracker’s Site Audit tool to get more data and combine it with the log file data.

Key Areas to Focus On:

- Status Codes: Identify HTTP errors, meaning 4xx and 5xx responses such as 404 Not Found, 410 Gone, and 500 Internal Server Error, and review redirects (3xx) that crawlers hit often.

- Crawl Budget: Note potential crawl budget wastage.

- Crawler Activity: Check which search engine bots crawl your website most frequently.

- Crawling Trends: Monitor crawling over time for any significant changes.

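- Orphan Pages: Look for URLs that appear in the logs but have no internal links pointing to them, as crawlers cannot reach these pages through your site’s navigation.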
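
Two of the checks above can be sketched in a few lines of Python: counting error responses per URL, and finding orphan candidates by comparing the URLs crawlers requested with the URLs reachable through internal links. Both `bot_requests` and `internally_linked` are invented sample data; in a real analysis the first would come from your parsed logs and the second from a site crawl export.

```python
from collections import Counter

# Hypothetical parsed bot requests: (URL path, HTTP status code).
bot_requests = [
    ("/", 200),
    ("/blog/post-1", 200),
    ("/old-page", 404),
    ("/old-page", 404),
    ("/retired-offer", 410),
    ("/landing/2019-promo", 200),
    ("/api/report", 500),
]

# Hypothetical set of URLs reachable through internal links, for example
# exported from a site crawler.
internally_linked = {"/", "/blog/post-1", "/old-page", "/api/report"}

# How many bot requests received each status code.
status_counts = Counter(status for _, status in bot_requests)

# Client (4xx) and server (5xx) errors, counted per URL.
error_hits = Counter(path for path, status in bot_requests if status >= 400)

# URLs that bots fetch successfully but that no internal link points to.
orphan_candidates = sorted(
    {path for path, status in bot_requests if status == 200} - internally_linked
)

print(status_counts)
print(error_hits.most_common())
print(orphan_candidates)
```

URLs with many error hits are good first candidates for redirects or link fixes, and each orphan candidate should be either linked internally or retired on purpose.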

Conclusion

Log file analysis is a powerful tool for technical SEO, providing deep insights into how search engine bots interact with your site. By identifying issues and optimizing your site based on this data, you can improve your website's crawl efficiency, fix errors, and ultimately enhance your search engine visibility. Regular log file analysis should be a part of your ongoing SEO strategy.

FAQs

How often should I perform log file analysis?

It depends on the size and activity level of your website. For smaller sites, a quarterly analysis might suffice, while larger sites with frequent updates might benefit from monthly or even weekly analysis.

What should I do if I find a lot of 404 errors in my log files?

You should investigate why these 404 errors are occurring. Common causes include deleted pages without proper redirects, broken internal links, or changes in URL structure. Implementing 301 redirects can help resolve these issues.

Can log file analysis help improve page load speed?

Indirectly, yes. By understanding which pages are frequently crawled and identifying any bottlenecks or errors, you can optimize those pages to improve load times, which can positively impact user experience and SEO.

Is log file analysis necessary if I already use Google Analytics?

Yes, log file analysis provides data that Google Analytics does not, such as detailed information on how search engine crawlers interact with your site. This data is crucial for identifying and resolving technical SEO issues.

By following these steps and utilizing the recommended tools, you can gain valuable insights into your website's performance and make informed decisions to enhance your SEO strategy.